Grafana Alloy

1. Overview

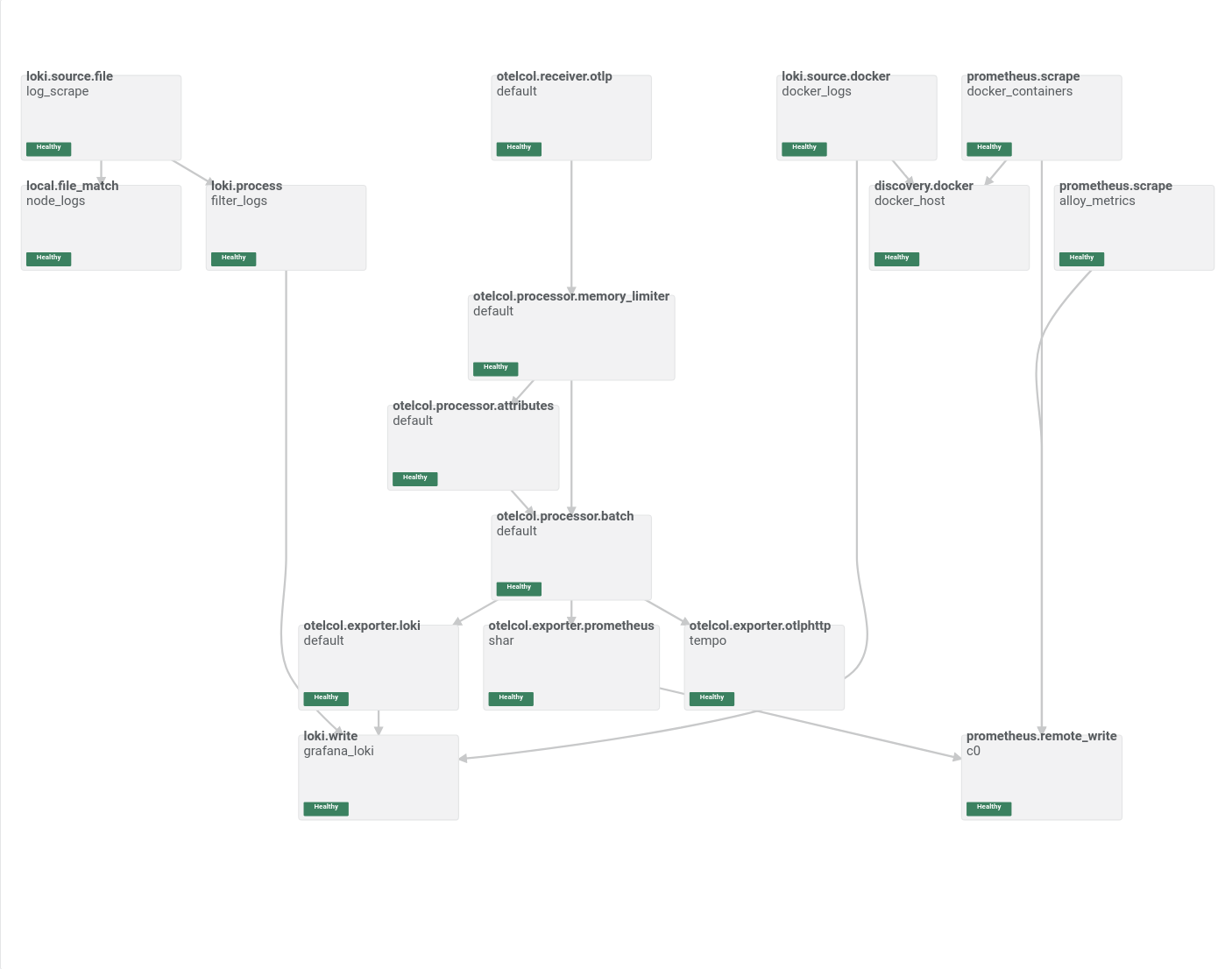

This setup uses Grafana Alloy, Grafana's distribution of the OpenTelemetry Collector, to ingest, process, and export telemetry data. It sends logs to Grafana Loki, metrics to Prometheus, and traces to Tempo. The configuration includes:

- Logging: Configures logging levels and formats.

- Receivers: Ingest telemetry data via OTLP, Docker, and file-based logs.

- Exporters: Send processed data to the backend systems (Loki, Prometheus, Tempo).

- Processors: Enhance data (e.g., add attributes, filter noise).

- Sources: Define which logs are tailed and which metrics are scraped.

Use a config.alloy file for writing these configurations to...

2. Logging

Configure the level and format of Alloy's own logs:

    logging {
      level  = "info"
      format = "logfmt"
    }

Options:

- level: Adjust verbosity (debug, info, warn, error).

- format: Specify the output format (json or logfmt).
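As a sketch of how these options combine, the variant below raises verbosity, switches to JSON, and also ships Alloy's own logs to Loki through the `write_to` argument of the `logging` block. It assumes the `loki.write "grafana_loki"` component from the Exporters section exists. A configuration can contain only one `logging` block, so this would replace the block above rather than sit next to it.

```alloy
// Alternative logging block: only one logging block is allowed per config.
logging {
  level  = "debug" // more verbose, useful while troubleshooting
  format = "json"  // structured output instead of logfmt

  // Assumption: loki.write.grafana_loki is defined in the Exporters section.
  write_to = [loki.write.grafana_loki.receiver]
}
```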

3. Receivers and Discovery

OTLP Receiver

The OTLP receiver handles incoming metrics, logs, and traces.

    otelcol.receiver.otlp "default" {
      grpc {
        endpoint = "0.0.0.0:4317"
      }
      http {
        endpoint = "0.0.0.0:4318"
      }
      output {
        // Send all received items to the memory limiter (see Processors).
        metrics = [otelcol.processor.memory_limiter.default.input]
        logs    = [otelcol.processor.memory_limiter.default.input]
        traces  = [otelcol.processor.memory_limiter.default.input]
      }
    }

Local File Matcher

Match log files from the host system.

    local.file_match "node_logs" {
      path_targets = [{
        __path__  = "/var/log/*.log",
        node_name = sys.env("HOSTNAME"),
        cluster   = sys.env("CLUSTER"),
      }]
    }
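The matcher above only covers files directly under /var/log. A minimal sketch of a broader match follows, using a recursive glob, the `__path_exclude__` label, and a longer `sync_period`. The component label `nested_logs` and the excluded directory `/var/log/alloy` are hypothetical names chosen for illustration.

```alloy
// Sketch: match logs in subdirectories too, but skip one directory.
local.file_match "nested_logs" {
  path_targets = [{
    __path__         = "/var/log/**/*.log",    // recursive glob
    __path_exclude__ = "/var/log/alloy/*.log", // hypothetical directory to skip
    node_name        = sys.env("HOSTNAME"),
  }]

  // How often to re-scan the filesystem for new or removed files.
  sync_period = "30s"
}
```

A `loki.source.file` component would then read from `local.file_match.nested_logs.targets` instead of `local.file_match.node_logs.targets`.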

Docker Discovery

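Discover running Docker containers through the Docker socket (optional). The discovered targets feed the Docker log source and the Docker metrics scrape below.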

    discovery.docker "docker_host" {
      host = "unix:///var/run/docker.sock"
    }

4. Exporters

Logs

Export logs to Grafana Loki. This assumes Loki runs in a container named loki on the same Docker network.

    loki.write "grafana_loki" {
      endpoint {
        url = "http://loki:3100/loki/api/v1/push"
      }
    }

    // For OTel-format logs
    otelcol.exporter.loki "default" {
      forward_to = [loki.write.grafana_loki.receiver]
    }

Metrics

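Export metrics to Prometheus via remote write. Prometheus must be started with --web.enable-remote-write-receiver for the /api/v1/write endpoint to accept data.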

    prometheus.remote_write "c0" {
      endpoint {
        url = "http://prometheus:9090/api/v1/write"
      }
    }

    // For OTel-format metrics
    otelcol.exporter.prometheus "shar" {
      forward_to = [prometheus.remote_write.c0.receiver]
    }

Traces

Export traces to Tempo.

    otelcol.exporter.otlphttp "tempo" {
      client {
        endpoint = "http://tempo:4318"
      }
    }
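If Tempo's OTLP gRPC receiver is preferred over HTTP, a sketch along these lines could be used instead. It assumes Tempo listens for OTLP gRPC on port 4317 without TLS, which is not stated in this guide; the label `tempo_grpc` is a hypothetical name.

```alloy
// Sketch: send traces to Tempo over OTLP gRPC instead of OTLP HTTP.
otelcol.exporter.otlp "tempo_grpc" {
  client {
    endpoint = "tempo:4317" // assumption: Tempo's OTLP gRPC port

    tls {
      insecure = true // plaintext; only suitable on a trusted network
    }
  }
}
```

The `traces` output of the batch processor would then point to `otelcol.exporter.otlp.tempo_grpc.input`.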

5. Sources

Log Sources

File-based Logs

    loki.source.file "log_scrape" {
      targets       = local.file_match.node_logs.targets
      forward_to    = [loki.process.filter_logs.receiver]
      tail_from_end = true
    }

Docker Logs

    loki.source.docker "docker_logs" {
      host    = "unix:///var/run/docker.sock"
      targets = discovery.docker.docker_host.targets
      labels  = {
        "container_id" = sys.env("HOSTNAME"),
        // "container_name" = "__meta_docker_container_name"
      }
      forward_to = [loki.write.grafana_loki.receiver]
    }
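The commented-out `container_name` entry would attach the literal string `__meta_docker_container_name` rather than the container's name, because `labels` holds static values. One way to get the real name is a relabel rule, sketched below with `discovery.relabel` and the `relabel_rules` argument of `loki.source.docker`. The labels `docker_labels` and `docker_logs_named` are hypothetical, and this component is meant to replace `docker_logs`, not run alongside it (otherwise every line would be shipped twice).

```alloy
// Sketch: derive container_name from Docker discovery metadata.
discovery.relabel "docker_labels" {
  targets = [] // only the exported rules are used, not the output targets

  rule {
    source_labels = ["__meta_docker_container_name"]
    regex         = "/(.*)" // Docker reports names with a leading slash
    target_label  = "container_name"
  }
}

loki.source.docker "docker_logs_named" {
  host          = "unix:///var/run/docker.sock"
  targets       = discovery.docker.docker_host.targets
  relabel_rules = discovery.relabel.docker_labels.rules
  forward_to    = [loki.write.grafana_loki.receiver]
}
```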

6. Processors

Log Processors

Filter noisy logs before sending to Loki.

    loki.process "filter_logs" {
      stage.drop {
        source              = ""
        expression          = ".*Connection closed by authenticating user root"
        drop_counter_reason = "noisy"
      }
      forward_to = [loki.write.grafana_loki.receiver]
    }
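Besides matching on content, `stage.drop` can also filter by age and by size. The sketch below shows both; the thresholds and the label `filter_logs_extra` are illustrative assumptions, not values taken from this setup.

```alloy
// Sketch: drop stale and oversized lines before they reach Loki.
loki.process "filter_logs_extra" {
  stage.drop {
    older_than          = "24h" // compared against the entry's timestamp
    drop_counter_reason = "too_old"
  }

  stage.drop {
    longer_than         = "8KB" // drop very long lines
    drop_counter_reason = "too_long"
  }

  forward_to = [loki.write.grafana_loki.receiver]
}
```

The `drop_counter_reason` values become the reason label on Alloy's dropped-lines counter, which makes it possible to see how much each rule removes.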

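Add attributes as labels. The loki.attribute.labels and loki.resource.labels hints tell otelcol.exporter.loki which log attributes and resource attributes to promote to Loki labels.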

    otelcol.processor.attributes "default" {
      action {
        key    = "loki.attribute.labels"
        action = "insert"
        value  = "event.domain, event.name"
      }
      action {
        key    = "loki.resource.labels"
        action = "insert"
        value  = "service.name, service.namespace"
      }
      output {
        logs = [otelcol.processor.batch.default.input]
      }
    }

Memory Limiter

Limit memory usage for telemetry processing.

    otelcol.processor.memory_limiter "default" {
      check_interval = "1s"
      limit          = "1GiB"
      output {
        metrics = [otelcol.processor.batch.default.input]
        // Send logs through the attributes processor to get label hints
        logs    = [otelcol.processor.attributes.default.input]
        traces  = [otelcol.processor.batch.default.input]
      }
    }
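The limiter also accepts a `spike_limit`, the headroom reserved for bursts between two checks; the limiter starts refusing data once usage passes `limit` minus `spike_limit`. The sketch below sets it explicitly. The 256MiB figure and the label `tuned` are assumptions for illustration, not a recommendation for this setup.

```alloy
// Sketch: memory limiter with an explicit spike limit.
otelcol.processor.memory_limiter "tuned" {
  check_interval = "1s"
  limit          = "1GiB"
  spike_limit    = "256MiB" // data is refused above 1GiB - 256MiB = 768MiB

  output {
    metrics = [otelcol.processor.batch.default.input]
    logs    = [otelcol.processor.attributes.default.input]
    traces  = [otelcol.processor.batch.default.input]
  }
}
```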

Batch Processing

Batch data before exporting.

    otelcol.processor.batch "default" {
      output {
        metrics = [otelcol.exporter.prometheus.shar.input]
        logs    = [otelcol.exporter.loki.default.input]
        traces  = [otelcol.exporter.otlphttp.tempo.input]
      }
    }
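The block above relies on the processor's defaults. If batches need tuning, the processor exposes `timeout`, `send_batch_size`, and `send_batch_max_size`. The values below are illustrative assumptions only, and the label `tuned` is hypothetical.

```alloy
// Sketch: batch processor with explicit size and time limits.
otelcol.processor.batch "tuned" {
  timeout             = "2s" // flush at least this often
  send_batch_size     = 4096 // flush once this many items are queued
  send_batch_max_size = 8192 // upper bound; larger batches are split

  output {
    metrics = [otelcol.exporter.prometheus.shar.input]
    logs    = [otelcol.exporter.loki.default.input]
    traces  = [otelcol.exporter.otlphttp.tempo.input]
  }
}
```

Larger batches and longer timeouts reduce the number of outgoing requests at the cost of added latency and more data held in memory.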

7. Metrics Scraping

Alloy Metrics Scrape

Scrape Alloy's own metrics from its HTTP server, which listens on port 12345.

    prometheus.scrape "alloy_metrics" {
      targets = [{
        __address__ = "127.0.0.1:12345",
      }]
      forward_to = [prometheus.remote_write.c0.receiver]
    }
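An alternative that avoids hard-coding the address is `prometheus.exporter.self`, which exposes Alloy's own metrics as scrape targets. The sketch below uses it with an explicit scrape interval; the labels `alloy` and `alloy_self` and the 30s interval are assumptions for illustration.

```alloy
// Sketch: scrape Alloy's own metrics without a hard-coded address.
prometheus.exporter.self "alloy" { }

prometheus.scrape "alloy_self" {
  targets         = prometheus.exporter.self.alloy.targets
  forward_to      = [prometheus.remote_write.c0.receiver]
  scrape_interval = "30s"
}
```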

Docker Container Metrics

Scrape metrics from the discovered Docker containers (optional). This only returns data for containers that expose a Prometheus metrics endpoint.

    prometheus.scrape "docker_containers" {
      targets    = discovery.docker.docker_host.targets
      forward_to = [prometheus.remote_write.c0.receiver]
    }
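For resource usage (CPU, memory, network) of containers that expose no metrics endpoint themselves, Alloy's embedded cAdvisor exporter is one option. The sketch below is a starting point under assumptions: the labels `docker` and `cadvisor` are hypothetical, and cAdvisor typically needs more host access (for example read-only mounts of /sys and the Docker data directory) than the Compose file in this guide grants.

```alloy
// Sketch: per-container resource metrics via the embedded cAdvisor exporter.
prometheus.exporter.cadvisor "docker" {
  docker_host = "unix:///var/run/docker.sock"
  docker_only = true // report only Docker containers
}

prometheus.scrape "cadvisor" {
  targets    = prometheus.exporter.cadvisor.docker.targets
  forward_to = [prometheus.remote_write.c0.receiver]
}
```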

8. Deploy

Run Alloy with Docker Compose, mounting config.alloy into the container:

    version: '3.8'
    networks:
      proxy:
        external: true
    volumes:
      data:
    services:
      alloy:
        image: grafana/alloy:latest
        container_name: alloy
        ports:
          - "12345:12345" # Alloy HTTP server (UI and metrics)
          - "4318:4318"   # OTLP over HTTP
          - "4317:4317"   # OTLP over gRPC
        networks:
          - proxy
        volumes:
          - //var/run/docker.sock://var/run/docker.sock:ro
          - ./config.alloy:/etc/alloy/config.alloy
          - data:/var/lib/alloy/data
          - /var/log:/var/log
        command: >
          run
          --server.http.listen-addr=0.0.0.0:12345
          --storage.path=/var/lib/alloy/data
          /etc/alloy/config.alloy